Due to the continuous development of technologies and the increase in data volumes, SAP is constantly innovating within the Datasphere to meet the needs of businesses at all times.

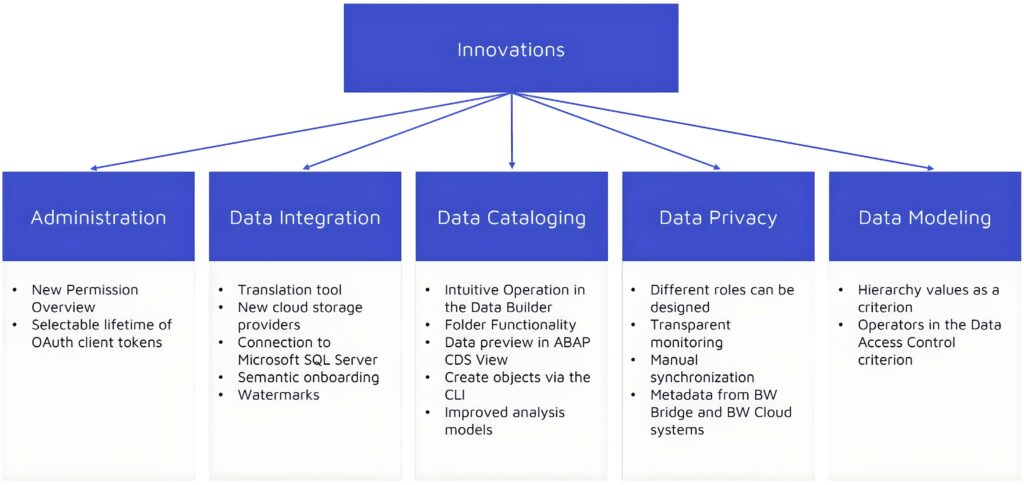

Our last blog post, What’s new in SAP Datasphere, was published a few weeks ago. This post presents the updates released since then. The focus is on the areas of Data Integration, Data Modeling, Data Cataloging, Administration, and Data Privacy and Protection.

Administration:

To further enhance the user experience, a new permissions overview page has been introduced in the administration area. In this overview, users with the global role of DW Administrator can view all users, roles, and spaces within the tenant and understand the relationships between them.

To increase efficiency, users have the option in the Datasphere to control the lifespan of the OAuth client token. Furthermore, the administration of the Datasphere is significantly simplified by allowing users to assign themselves roles for specific application areas.

Data Integration:

The Datasphere sets new standards in data integration by introducing a translation tool. With this tool, SAP Analytics Cloud stories can be made available worldwide by translating their metadata in the Datasphere. The “Translation” permission grants access to a translation dashboard, where metadata such as the business names and column names of dimensions and analytic models used in stories can be translated into a variety of languages.

Recently, it has also become possible to select additional cloud storage providers as targets for replication flows. The scope has been expanded to include the following cloud storage providers and corresponding connection types:

In addition, local tables from SAP Datasphere can now be used as source objects for a replication flow.

In the future, it will be possible to create a connection to Microsoft SQL Server (On-Premise) in the Datasphere. This DBMS enables analysis, business intelligence, and machine learning scenarios. Both remote tables and data flow features are supported. The following data access methods are offered for remote tables:

Transformation flows are used to load data into a target table. Depending on the requirement, this flow can be run several times, for example, when selecting Delta Loading. This functionality has been supplemented with so-called watermarks. Watermarks correspond to a timestamp in the source table and are used to track the data being transferred. If the watermark is reset, the system will load the data into the target table again next time and annotate it with a timestamp.
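To make the watermark idea more tangible, here is a minimal Python sketch of a delta load. It is not how SAP Datasphere implements transformation flows; the class `TransformationFlowSketch`, the `changed_at` column, and the in-memory target are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class TransformationFlowSketch:
    """Toy model of a delta load; names and behavior are illustrative assumptions."""
    target: dict = field(default_factory=dict)   # target table, keyed by row id
    watermark: Optional[datetime] = None          # newest source timestamp loaded so far

    def run(self, source_rows):
        # Pick up only rows changed after the watermark (all rows if it is unset).
        delta = [row for row in source_rows
                 if self.watermark is None or row["changed_at"] > self.watermark]
        for row in delta:
            self.target[row["id"]] = row          # insert or overwrite in the target
        if delta:
            self.watermark = max(row["changed_at"] for row in delta)
        return len(delta)

    def reset_watermark(self):
        # After a reset, the next run transfers every source row again.
        self.watermark = None


source = [
    {"id": 1, "changed_at": datetime(2024, 1, 1)},
    {"id": 2, "changed_at": datetime(2024, 1, 2)},
]
flow = TransformationFlowSketch()
first = flow.run(source)     # initial load: both rows
second = flow.run(source)    # nothing changed since the watermark
source.append({"id": 3, "changed_at": datetime(2024, 1, 3)})
third = flow.run(source)     # only the new row
flow.reset_watermark()
fourth = flow.run(source)    # full reload after the reset
```

In this sketch, each run moves the watermark forward to the newest timestamp it has seen, so repeated runs transfer only the delta until the watermark is reset.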

A holistic innovation is the Semantic Onboarding. This supports the import of semantic objects from other SAP systems as well as the Content Network. Furthermore, the Public Data Marketplace and other marketplaces can be used as sources.

Connections can be added to packages either via the transport app or when the connection is created. As a result, connections can be exported and imported via the transport app.

The Datasphere responds to the growing requirements regarding the BW Bridge and therefore offers the possibility to add source tables from SAP BW Bridge spaces to transformation flows.

There is an exciting development in task chains: users can be notified by email if an error occurs during the initialization or preparation of a task chain, even before the task chain itself starts running. Additionally, replication flows can be included in task chains.

Data Modeling:

The Data Modeling updates focus on making the Data Builder more intuitive to use. It is now possible to filter for specific objects on the Data Builder page. This is particularly useful in large spaces, where it reduces the search effort.

In our view, a particularly practical update has been made to packages. Modelers can now add further object types, such as data flows, replication flows, and transformation flows, to a package within a space directly from the relevant editor. This also works from data access controls. The enhancement makes working with packages more efficient and user-friendly.

The Data Builder can now display tables, views, and intelligent lookups in the new repository tab of the source browser. Additionally, filters can be used to narrow the search for specific tables. Semantically related objects can be grouped in folders, which can be created in the Repository Explorer, Business Builder, or Data Builder.

For ABAP CDS Views, users can preview data in the Data Flow using the preview function in the Datasphere. For this, a source connection to SAP S/4HANA Cloud 2302, SAP S/4HANA on-premise 1909, or higher must be present.

With the latest changes in data modeling, the Datasphere now offers improved user-friendliness for analytic models. In addition to the possibility of deleting several dimensions at once, the details of an analytic model now show the dependencies of measures, attributes, and variables. In the future, existing measures can be copied when creating new ones.

The latest updates also make it possible to create and update modeling objects via the command line interface (CLI). Modeling objects of various types can be created this way. The prerequisite is an existing JSON file.

Data Cataloging:

With the latest update, data cataloging in the Datasphere has undergone significant optimization. The user experience is improved by impact and lineage diagrams that can display assets from SAP Datasphere and the SAP BW Bridge. In addition, metadata for some objects from SAP BW Cloud systems, such as InfoArea, InfoObject, or DataStore Object (Advanced), can be monitored and extracted into the catalog in the Datasphere.

Another focus of SAP is on the Data Catalog to continue supporting businesses in building and implementing a data governance strategy that fits their corporate goals. As an entry point, there is the option to define various roles and thus empower users with different functional scopes. For example, it can be explicitly determined which role can read, delete, or update an asset, glossary, or glossary object. Each company can individually design these roles, depending on the departments or the employee’s area of responsibility.
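As a rough illustration of such a role concept, the following Python sketch maps roles to the actions they may perform on assets, glossaries, and glossary objects. The role names (`catalog_viewer`, `glossary_steward`, `catalog_admin`) and the permission sets are hypothetical and are not taken from SAP Datasphere; in the product, roles are configured in the administration area rather than in code.

```python
# Hypothetical catalog roles; names and permission sets are illustrative assumptions.
CATALOG_ROLES = {
    "catalog_viewer": {
        "asset": {"read"},
        "glossary": {"read"},
        "glossary_object": {"read"},
    },
    "glossary_steward": {
        "asset": {"read"},
        "glossary": {"read", "update"},
        "glossary_object": {"read", "update", "delete"},
    },
    "catalog_admin": {
        "asset": {"read", "update", "delete"},
        "glossary": {"read", "update", "delete"},
        "glossary_object": {"read", "update", "delete"},
    },
}


def is_allowed(role: str, action: str, object_type: str) -> bool:
    """Return True if the role grants the action on the given object type."""
    return action in CATALOG_ROLES.get(role, {}).get(object_type, set())


viewer_can_delete = is_allowed("catalog_viewer", "delete", "asset")
steward_can_update = is_allowed("glossary_steward", "update", "glossary")
```

A matrix like this can be a useful starting point for discussing which department or area of responsibility needs which scope before the roles are set up in the tenant.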

Furthermore, the monitoring page has been updated to make the connections to the source systems more transparent. In the future, this will enable users to view all data and analytical objects in the extraction summary. This leads to a clearer representation of objects that, for example, have not been synchronized.

In addition, SAP has worked to reduce the amount of data transferred per load. By defining which users are authorized for the source system, the extraction of metadata from that system can be enabled and its scope determined.

The newly introduced manual synchronization also builds on this principle. If a manual synchronization is performed on an existing source system, only the objects that have been added or changed since the last manual synchronization will be updated.

Data Privacy and Protection:

In the area of Data Privacy and Protection, two aspects have been added to protect data from unauthorized access.

Firstly, it is possible to pass hierarchy values to the criteria of the Data Access Controls. This allows users to view only those records that are associated with the corresponding hierarchy value. The user then also sees the records of all descendants for which they are authorized according to the permissions entity.
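The effect of a hierarchy-based criterion can be sketched in a few lines of Python. This is a simplified model and not the Datasphere implementation: the region hierarchy, the `permissions` mapping, and the function names are assumptions chosen for the example.

```python
from collections import defaultdict


def descendants(parent_of, node):
    """Return the node itself plus all of its descendants in a child -> parent mapping."""
    children = defaultdict(list)
    for child, parent in parent_of.items():
        children[parent].append(child)
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(children[current])
    return found


def visible_records(records, parent_of, permissions, user):
    """Keep only records whose region lies under a node the user is authorized for."""
    allowed = set()
    for node in permissions.get(user, []):
        allowed |= descendants(parent_of, node)
    return [record for record in records if record["region"] in allowed]


# Hypothetical hierarchy (child -> parent) and permissions (user -> hierarchy nodes).
parent_of = {"Europe": "World", "Germany": "Europe", "France": "Europe", "USA": "World"}
permissions = {"anna": ["Europe"], "bob": ["USA"]}
records = [
    {"id": 1, "region": "Germany"},
    {"id": 2, "region": "France"},
    {"id": 3, "region": "USA"},
]

anna_ids = [r["id"] for r in visible_records(records, parent_of, permissions, "anna")]
bob_ids = [r["id"] for r in visible_records(records, parent_of, permissions, "bob")]
```

Here a single entry for the node "Europe" is enough to give the user access to the records for Germany and France, which is the main benefit of passing hierarchy values instead of listing every leaf value.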

Secondly, the Data Access Control has been extended so that criteria can be defined as operator and value pairs. As in the scenario described above, the user is shown only the records for which the criteria, combined with AND or OR, for example, apply.
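A minimal Python sketch of how operator and value pairs could be evaluated against records looks like this. The operator codes (`EQ`, `GE`, and so on), the tuple layout of a criterion, and the `combine` parameter are assumptions for illustration and do not reflect the actual structure of a permissions entity in SAP Datasphere.

```python
import operator

# Hypothetical operator codes mapped to Python comparison functions.
OPERATORS = {
    "EQ": operator.eq,
    "NE": operator.ne,
    "GT": operator.gt,
    "GE": operator.ge,
    "LT": operator.lt,
    "LE": operator.le,
}


def matches(record, criteria, combine="AND"):
    """Check a record against (column, operator, value) criteria joined by AND or OR."""
    if combine not in ("AND", "OR"):
        raise ValueError("combine must be 'AND' or 'OR'")
    checks = [OPERATORS[op](record[column], value) for column, op, value in criteria]
    return all(checks) if combine == "AND" else any(checks)


records = [
    {"id": 1, "country": "DE", "amount": 500},
    {"id": 2, "country": "FR", "amount": 1500},
    {"id": 3, "country": "DE", "amount": 2000},
]
criteria = [("country", "EQ", "DE"), ("amount", "GE", 1000)]

and_ids = [r["id"] for r in records if matches(r, criteria, "AND")]
or_ids = [r["id"] for r in records if matches(r, criteria, "OR")]
```

With AND, only the record that satisfies both criteria remains visible; with OR, every record that satisfies at least one of them is shown.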

Outlook

The landscape of data management and analysis is constantly evolving, and the Datasphere is at the forefront of this development.

In summary, since the last blog post, numerous innovative and user-friendly updates have been added to the SAP Datasphere. In terms of Data Integration, many new connections to source systems have been added, and in Data Modeling, the user has been given the opportunity to optimally organize their entities, for example, using folders. The Data Catalog as well as Data Privacy and Protection contribute to the company being able to achieve its own formulated goals and comply with legally prescribed frameworks.

In the coming months, further interesting updates are expected. The exact goals can be followed in the roadmap (SAP Road Map Explorer).

In our opinion, the SAP Datasphere has developed into a Data Fabric over the last year. The progress for this year looks promising and is intended to particularly facilitate the management of data.
